Digital Oscillator Deep Dive

It doesn't get more "cycles" than sinusoids. This post is about efficiently generating sine waves with the fewest CPU cycles, something I investigated while preparing my presentation for the Signal Processing Summit and DSP Online Conference.

My original desktop program to sonify the tides simply used the C

standard library cosine function, cos().1 When I ported that to

the Teensy 3.2 with an audio adapter in 2016 that didn't perform well

enough and I had to resort to a Taylor series approximation run it in

real-time on that device.

I've learned some new tricks since then.

First, the Levine-Vicanek quadrature oscillator can output a new sine-cosine pair with just three multiplies and three additions using only the last output pair. It reduced the total run-time of my tide sonification program by 87% on my laptop.

Rick Lyons wrote about it on DSPRelated.com in 2023 and provided a new proof of it's stability. Vicanek sketched a different stability proof in his 2015 paper, but it took me a while to understand it.

Second, just two days before I presented at the Signal Processing Summit I stumbled across the a recurrence relation for cosine in Real Computing Made Real, by Forman S. Acton, p. 173:

\[\cos((n+1)\theta) = 2\cos(\theta)\cos(n\theta)-\cos((n-1)\theta)\]

This outputs a new cosine value with just one multiply and one addition using the two previous outputs.2 I raced to implement it and included the performance results as a bonus slide: a 90% reduction in total run-time. Two of them running in parallel offset by 90 degrees would give a sine-cosine pair in just two multiples and two additions.

I would not have understood the utility of this equation before I implemented the Levine-Vicanek quadrature oscillator to optimize my tide sonification program.

History

Acton didn't attribute the formula to anyone, but Wikipedia calls it the "Chebyshev method", citing Ken Ward's page on trigonometric identities. He chose that name simply because Chebyshev clearly knew the identity, but it was known well before him. Clay Turner calls it the "biquad oscillator" and attributes the formula to François Viète. Glen Van Brummelen also attribute this recurrence relation to Viète.

Boyer and Merzbach (2nd ed, p. 309) also attribute it to Viète and show his geometric proof of the Prosthaphaeresis Formulas from his Canon mathematicus published in 1579.3 These were used to quickly multiply (or divide) numbers by changing to an addition (or subtraction) and helped inspire logarithms for the same purpose. The formula using cosine is:

\[2\cos(A)\cos(B)=\cos(A+B)+\cos(A-B)\]

A simple rearrangement and setting \(A=n\theta\) and \(B=\theta\) gives the above form for the recurrence relation:

\[\cos(A+B)=2\cos(B)\cos(A)+\cos(A-B)\]

\[\cos((n+1)\theta)=2\cos(\theta)\cos(n\theta)-\cos((n-1)\theta)\]

However, Boyer and Merzbach attribute this cosine formula even earlier to the Egyptian mathematician ibn-Yunus around the year 1008 (2nd ed, p.240). His Wikipedia page also mentions this formula, but that is not currently reflected in the other Wikipedia pages.

Branko Ćurgus at WWU provides a neat and simple derivation of the recurrence relation using modern notation (see equation "(rr)").

Stability Proof

Both Vicanek and Lyons provided a proof for the quadrature oscillator stability, but I have not seen a similar proof for the ibn-Yunus-Viète-Chebyshev recurrence relation.

This is a block diagram for the Viète-Chebyshev recurrence relation, with a dummy \(x(n)\) added4:

Here's a stability proof for the Viète-Chebyshev recurrence relation that follows Lyons' proof fairly closely.

Starting with the trigonometric form of the recurrence relation:

\[\cos((n+1)\theta) = 2\cos(\theta)\cos(n\theta)-\cos((n-1)\theta)\]

Renumbering and renaming: \[y_n = 2\cos(\theta) y_{n-1} - y_{n-2}\]

Let \(k=2\cos(\theta)\).

\[y_n = k y_{n-1} - y_{n-2}\]

Finding the transfer function using Rick Lyons' fake \(x(n)\) impulse response trick:

\[y_n = k y_{n-1} - y_{n-2} + x_n\]

Take the z-transform:

\[Y(z) = k Y(z)z^{-1} - Y(z)z^{-2} + X(z)\]

Solve for \( H(z)=Y(z)/X(z) \): \[X(z) = Y(z) - k Y(z)z^{-1} + Y(z)z^{-2}\]

\[\frac{X(z)}{Y(z)} = 1 - k z^{-1} + z^{-2}\]

\[H(z) = \frac{Y(z)}{X(z)} = \frac{1}{1 - k z^{-1} + z^{-2}}\]

Multiply by \(z^2/z^2\) to get positive exponents: \[H(z) = \frac{z^2}{z^2 - k z + 1}\]

This gives two zeros at the origin and two poles.

Factoring to find the poles, we need to find \(a\) and \(b\) such that \(a b=1\) and \(a+b=-k=-2\cos(\theta)\):

\[H(z) = \frac{z^2}{(z+a)(z+b)}\]

Both \(k\) and \(1\) are real, so \(a+b\) is on the real axis. Because magnitudes multiply and phases add when multiplying complex numbers, \(|a|=|b|=1\), and \(\arg(a)=-\arg(b)\).

We can show that the complex conjugate pair \(1e^{\pm j \theta}\) satisfies these requirements using quadratic factorization (Lyons, p. 255):

\[f(s) = as^2 + bs + c = \\ \left( s + \frac{b}{2a} + \sqrt{\frac{b^2-4ac}{4a^2}} \right) \cdot \left( s + \frac{b}{2a} - \sqrt{\frac{b^2-4ac}{4a^2}} \right) \]

We have:

\begin{align*} z^2 - k z + 1 &= \left( z - \frac{k}{2} + \sqrt{\frac{k^2-4}{4a^2}} \right) \cdot \left( z - \frac{k}{2} - \sqrt{\frac{k^2-4}{4a^2}} \right) \\ &=\left( z - \cos(\theta) + \sqrt{\cos^2(\theta)-1} \right) \cdot \left( z - \cos(\theta) - \sqrt{\cos^2(\theta)-1} \right) \end{align*}Since \(\cos^2(\theta) + \sin^2(\theta) = 1\), \(\sqrt{\cos^2(\theta)-1}=\sqrt{-\sin^2(\theta)}=j \sin(\theta)\).

So we have:

\begin{align*} z^2 - k z + 1 &= \left( z - \cos(\theta) + j\sin(\theta) \right) \cdot \left( z - \cos(\theta) - j\sin(\theta) \right) \\ &= (z-e^{j\theta})(z-e^{-j\theta}) \\ &= z^2 - e^{j\theta}z-e^{-j\theta}z + e^{j\theta}e^{-j\theta} \\ &= z^2 - (e^{j\theta}+e^{-j\theta})z + 1 \\ &= z^2 - 2\cos(\theta) + 1 \\ &= z^2 - k z + 1 \end{align*}And so \(1 e^{\pm j\theta}\) are indeed the poles of our transfer function. Because the poles lie on the unit circle the system is stable.

The poles are never calculated directly, only \(2\cos(\theta)\) (and the initial outputs), so the poles will always land on the unit circle even with limitations in numerical precision. It's the structure of this recurrence relation that gives the stability. However, numerical precision will limit the frequency accuracy and lead to frequency drift from the desired frequency.

The discriminant of the quadratic formula determines whether the roots are complex or real. Because the range of cosine is \([-1, 1]\), the range of the discriminant \(b^2-4ac = 4\cos^2(\theta)-4\) is \([-4, 0]\). When the discriminant is negative, there are two complex conjugate roots. When the discriminant is zero, at the extreme of \(\theta=0\), those roots meet and form a single root at 1. In this case, the oscillator will output a constant value.

Difference Equations

I later learned that difference equations techniques (similar to differential equations but discretized) can also be used to analyze this recurrence relation, as described on this Wikipedia page. In particular, the "characteristic polynomial" gives a more direct way to find the transfer function's denominator, \(z^2 - k z + 1\).

Given:

\[y_t=a_1 y_{t-1} + \cdots + a_n y_{t-n}\]

the characteristic polynomial is:

\[p(\lambda) = \lambda^n - a_1\lambda^{n-1} - a_2\lambda^{n-2} - \cdots - a_n\]

So the characteristic polynomial for \(y_n = k y_{n-1} - y_{n-2}\) can be directly determined as:

\[p(\lambda) = \lambda^2 - k \lambda + 1\]

And a simple variable change gets us to:

\[p(z) = z^2 - k z + 1\]

Experimental Results

Beware of bugs in the above code; I have only proved it correct, not tried it.

–Donald Knuth

Of course I had to experimentally validate these oscillators and compare their performance.

For these experiments I have used a phase accumulator (unwrapped between 0 and \(2\pi\)) and sine and cosine functions as the reference signal. Of course, this will also drift somewhat from the ideal phase due to fixed numerical precision in the accumulator, but it is still a reasonable reference.

Note: The plots below use a sample rate of 44.1kHz, but I plan to replace them with normalized frequency plots in the future.

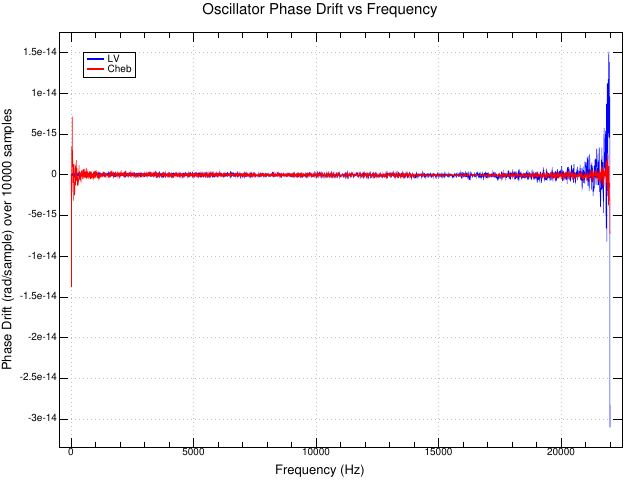

This plot shows how phase error (calculated with atan2())

accumulates in the Levine-Vicanek ("LV") and "Cheb" quadrature

oscillators compared to the trig function phase accumulator at 1 kHz

and a 44.1 kHz sample rate:

A few things to notice:

- The Cheb oscillator has a noisy sinusoidal component in their phase, likely from the two free-running sinusoids.

- The LV oscillator has a much less noisy phase error, because the two outputs are coupled and influence each other at each step. Vicanek mentions this effect in his paper.

The slope of the above graph varies with frequency, sometimes positive and sometimes negative. Sweeping over frequency and fitting a line to each slope gives the phase drift as a function of frequency for the two oscillators:

For the Levine-Vicanek oscillator, a 440 Hz tone at 44.1 kHz accumulates 1.68e-7 radians of error each second. At that rate it takes 11.6 years to accumulate a whole cycle of error.

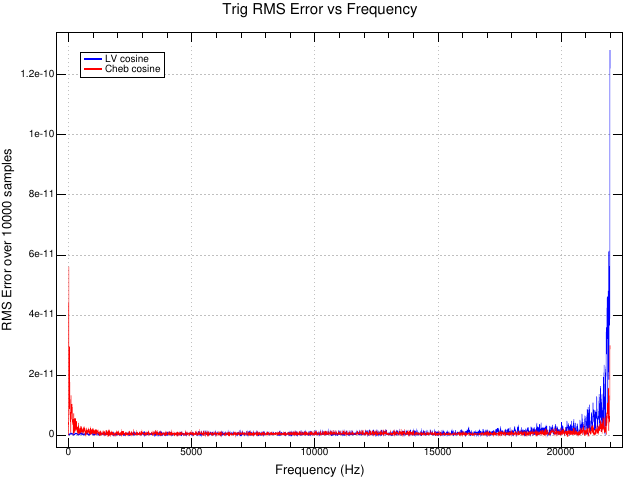

This plot shows the RMS error between the oscillator cosine outputs

and the cos() trig function as a function of frequency:

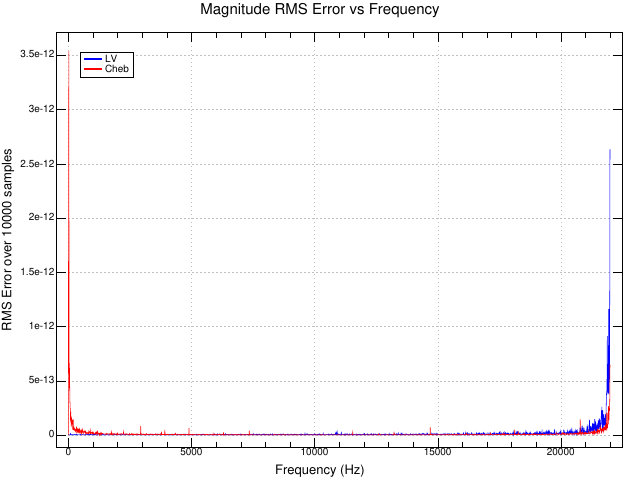

This plot shows the RMS error from the ideal complex magnitude of unity for the two oscillators as a function of frequency:

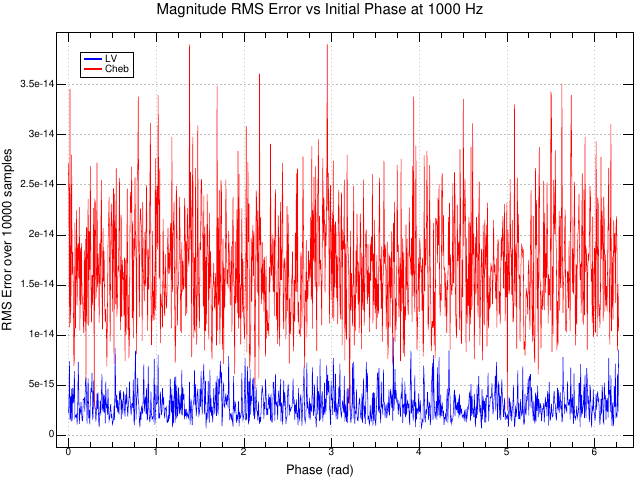

This plot shows the same RMS error from the ideal complex magnitude of unity for the two oscillators, but as a function of starting phase at a fixed frequency of 1000 Hz:

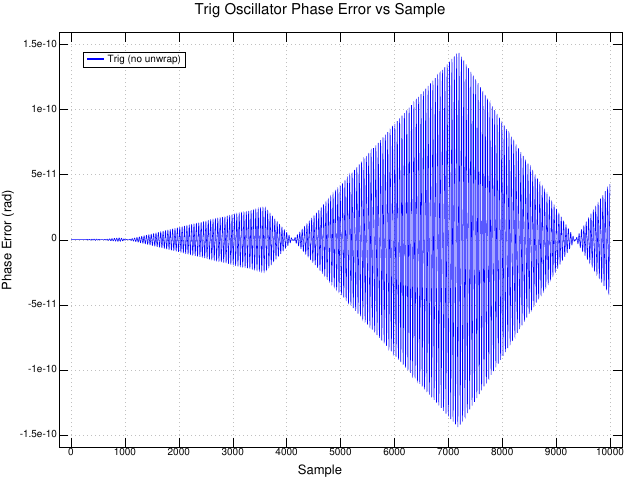

For reference, this plot shows how poorly the trig functions perform when you neglect to unwrap the phase accumulator. It compares the output of the trig functions with and without unwrapping the phase accumulator. There are large jumps in phase error as the phase accumulator grows and the trig function produces less accurate results. Clearly the range reduction inside the library function isn't working well, and this makes sense when you consider that a large phase accumulator value has fewer bits of precision for the portion of the value modulo \(2\pi\).

The takeaway here is that the drift performance of the two quadrature oscillators is quite good compared to sloppy handling of a phase accumulator and that phase accumulators should be unwrapped.

Source Code

For these experiments I started with my usual blend of C, Awk, Make, and Gnuplot, but eventually switched to the J language, because it made exploring them faster. J is a descendant of APL, both designed by Ken Iverson and collaborators. I will post the C code in later post about my tides code.

The J code is in osc.ijs. This snippet shows the oscillator implementations:

NB. Levine-Vicanek Quadrature Oscillator NB. Notation from "A New Contender in the Quadrature Oscillator Race" NB. by Richard Lyons. NB. f_hz: oscillator frequency NB. p_rad: phase NB. s_hz: sample rate lv_init=: 3 : 0 "1 'f_hz p_rad s_hz' =. y rps=.(2p1*f_hz)%s_hz NB. radians per sample rad=. 2p1#:!.0 p_rad NB. Unwrap phase. (2 o. rad), (1 o. rad), (3 o. rps%2), (1 o. rps) NB. u v k1 k2 ) lv_step=: 3 : 0 "1 'u v k1 k2'=. y w=. u - k1 * v nv=. v + k2 * w nu=. w - k1 * nv nu, nv, k1, k2 ) lv_osc=: 3 : 0 "1 0 lv_osc y : NB. One-liner: NB. (j./)@:(2&{.)"1 lv_step^:(<x) lv_init y lv=. lv_step^:(<x) lv_init y (0{"1 lv) j. (1{"1 lv) ) NB. Chebyshev Cosine Recurrence Relation NB. From Acton, p. 173, Recurrence Relations. NB. x: 1 for sine, 2 for cosine NB. f_hz: oscillator frequency NB. p_rad: phase NB. s_hz: sample rate NB. v0: current value NB. v1: previous value NB. k1: step coefficient cheb_init=: 3 : 0 "1 2 cheb_init y : 'f_hz p_rad s_hz' =. y rps=.(2p1*f_hz)%s_hz NB. Radians per sample rad=. 2p1#:!.0 p_rad NB. Unwrap phase. (x o. rad), (x o. rad - rps), 2 * (2 o. rps) NB. v0 v1 k ) cheb_step=: 3 : 0 "1 'v0 v1 k'=. y ((k * v0) - v1), v0, k ) cheb_osc=: 3 : 0 "1 0 cheb_osc y : cos=. {."1 cheb_step^:(<x) 2 cheb_init y sin=. {."1 cheb_step^:(<x) 1 cheb_init y cos j. sin ) NB. Trig "oscillator" NB. f_hz: oscillator frequency NB. p_rad: phase NB. s_hz: sample rate NB. cos: cosine NB. sin: sine NB. rad: phase in radians NB. rps: omega (radians per sample) trig_init=: 3 : 0 "1 'f_hz p_rad s_hz' =. y rps=.(2p1*f_hz)%s_hz NB. Radians per sample rad=. 2p1#:!.0 p_rad NB. Unwrap phase. (2 o. rad), (1 o. rad), rad, rps NB. cos sin rad rps ) trig_step=: 3 : 0 "1 'cos sin rad rps'=. y rad=. 2p1#:!.0 rad+rps NB. Increment and unwrap phase. (2 o. rad), (1 o. rad), rad, rps ) trig_osc=: 3 : 0 "1 0 trig_osc y : trig=. trig_step^:(<x) trig_init y (0{"1 trig) j. (1{"1 trig) )

Footnotes:

In truth, I accidentally used sin() because I didn't read the

NOAA documentation closely enough. The mechanical tide computers all

use sine, because the Scotch yoke mechanism moves the pulleys up and

down, but the prediction equations all use cosine.

I was in book heaven, aka Powell's Books in Portland, OR the weekend before the conference. They used to have a whole technical bookstore in a separate building, but even after they merged it into the main store it's still huge. I was about to leave when I found a print copy of NOAA's Special Publication No. 98: Manual of Harmonic Analysis and Prediction of Tides from 1940. Then I scanned the shelves for a few more hours to make sure I didn't miss any other gems.

Boyer and Merzbach's A History of Mathematics is one of my favorite books. I discovered the 2nd edition in my community college library twenty years ago and quickly bought my own copy. I study it periodically as my mathematics knowledge grows.

I don't have any tattoos, but Viète's geometric proof of the Prosthaphaeresis Formulas has been a top contender for my first since about 2013, because those formulas describe how RF mixers output the sum and difference frequencies when multiplying two sinusoids. Now that I know that same geometric proof describes this recurrence relation I am even more tempted to get it.

There are some obvious similarities to the Goertzel block diagram here and the cosine factor is the same. In fact, I made this diagram by trimming the Pikchr code for my Goertzel diagram. The similarities are probably why Turner used the term "biquad oscillator": they are both subsets of the Direct Form II biquad filter structure.